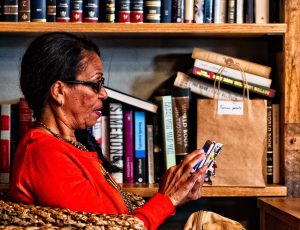

The number of patients treated at home is rapidly increasing in several countries, while the elderly population has grown over the last 15 years. To help elderly patients preserve their autonomy and live at home longer, some receive treatment at home. In this context, they may experience critical situations that require further medical assistance.

Advances in ubiquitous computing and the Internet of Things (IoT) have provided effective and cheap equipment, including wireless communication, sensors, and cameras (e.g., smartphones or embedded devices). Embedded computing enables the deployment of Health Smart Homes (HSH), which can support in-home medical treatment. The use of cameras and image processing in IoT has not been fully explored in the context of HSH[I].

The use of Health Smart Homes is promising. This concept has emerged from a combination of telemedicine, domotics products, and information systems. It can be defined as a Smart Home equipped with specialised devices for remote healthcare.

In view of this, TeNDER is close to completing five large-scale pilots targeting patients with Alzheimer’s, cardiovascular diseases, and/or Parkinson’s. In each pilot setting (i.e., in-hospital, at home, and in day- and full-time care homes), patients have been monitored using sensors, cameras that capture movement, affective recognition technology, wristbands that record basic vitals, etc.

Our assistive technological system includes devices, mainly sensors, and actuators, which can act whenever a critical situation is detected, helping caregivers monitor people in need.

The assistive technology system that Datawizard implemented within the TeNDER project consists of three modules dedicated to patient interaction and tracking:

Chit-Chat module

The patient pronounces keywords to activate a virtual assistant that will answer the specific question and, if necessary, send a contact request to the healthcare provider via the app.

How does it work?

The assistant consists of a set of commands that can be triggered either by voice (Speech Analysis) or by an event detected by a sensor (API Information).

On the one hand, the assistant relies on speech analysis: it continuously records the audio stream from the microphone and sends it to the Google API, which converts it into text. The virtual assistant then activates the corresponding routine and, when needed, sends a notification to the patient’s assistant.
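The keyword-to-routine step can be sketched as follows. This is a minimal illustration that starts from already transcribed text; the keywords, routine names, and return values are hypothetical and are not taken from the TeNDER code base.

```python
from typing import Callable, Dict, Optional


# Hypothetical routines: the real command set is not described in this article.
def tell_time() -> str:
    return "routine:time"


def request_contact() -> str:
    return "routine:contact_request"


# Hypothetical keyword table mapping a spoken phrase to a routine.
COMMANDS: Dict[str, Callable[[], str]] = {
    "what time": tell_time,
    "call my doctor": request_contact,
}


def dispatch(transcript: str,
             commands: Dict[str, Callable[[], str]] = COMMANDS) -> Optional[str]:
    """Run the first routine whose keyword appears in the transcribed text."""
    text = transcript.lower()
    for keyword, routine in commands.items():
        if keyword in text:
            return routine()
    return None  # no keyword heard: nothing is triggered
```

Matching on lower-cased substrings keeps the sketch simple; a real assistant would likely need more robust matching.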

On the other hand, it also relies on API information: it regularly checks the HAPI-FHIR API and, if a new event is detected (an irregular heart rate, too few daily steps, etc.), asks the patient whether everything is OK and/or sends a notification to the assistant.
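A rough sketch of this polling step is shown below. It assumes the events arrive as dictionaries with `id` and `kind` keys through an injected `fetch` function; those names, and the rule in `react`, are illustrative assumptions and not the actual HAPI-FHIR client calls or TeNDER logic.

```python
from typing import Callable, Dict, Iterable, List, Set

Event = Dict[str, str]


def new_events(fetch: Callable[[], Iterable[Event]], seen: Set[str]) -> List[Event]:
    """Return events that were not handled before and remember their ids."""
    fresh = []
    for event in fetch():
        if event["id"] not in seen:
            seen.add(event["id"])
            fresh.append(event)
    return fresh


def react(event: Event) -> List[str]:
    """Map an event to actions; this rule is an illustrative assumption."""
    actions = ["ask_patient_if_ok"]
    if event["kind"] == "irregular_heart_rate":
        actions.append("notify_assistant")
    return actions
```

Keeping the set of seen ids between polls is what makes an event count as "new" only once.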

Vocal Reminder

This module retrieves reminders from the calendar of the Android TeNDER App and plays them at the scheduled time on the client speakers using the Microsoft Text-To-Speech engine.

How does it work?

The reminder module consists of a set of regularly executed tasks, which communicate with the TeNDER App via the HAPI-FHIR API. The reminder module checks for new or edited calendar reminders and copies them into a local SQLite database.
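The copy step could look roughly like the sketch below, which uses Python's built-in `sqlite3` module. The table layout and the `id`, `info`, and `due_at` fields are assumptions made for illustration; the article does not describe the actual schema.

```python
import sqlite3
from typing import Dict, Iterable


def open_store(path: str = ":memory:") -> sqlite3.Connection:
    """Open the local database and create the (hypothetical) reminders table."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS reminders ("
        "id TEXT PRIMARY KEY, info TEXT, due_at TEXT, scheduled INTEGER DEFAULT 0)"
    )
    return conn


def sync(conn: sqlite3.Connection, remote: Iterable[Dict[str, str]]) -> int:
    """Copy new or edited reminders locally; return how many rows changed."""
    changed = 0
    for r in remote:
        row = conn.execute(
            "SELECT info, due_at FROM reminders WHERE id = ?", (r["id"],)
        ).fetchone()
        if row == (r["info"], r["due_at"]):
            continue  # already stored and unchanged
        # New or edited: store it and mark it as not yet scheduled.
        conn.execute(
            "INSERT OR REPLACE INTO reminders (id, info, due_at, scheduled) "
            "VALUES (?, ?, ?, 0)",
            (r["id"], r["info"], r["due_at"]),
        )
        changed += 1
    conn.commit()
    return changed
```

Resetting `scheduled` to 0 on every change lets a later task pick up edited reminders and schedule them again.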

If a reminder that has not yet been scheduled is found, the module schedules the corresponding “speak” task. If the user has set a vocal notice for the event in the TeNDER app (e.g., one day before the actual event), a “speak” task is also scheduled for the notice.
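As a small illustration, the times at which the “speak” tasks fire can be derived from the event time and an optional notice offset. The function and label names here are hypothetical.

```python
from datetime import datetime, timedelta
from typing import List, Optional, Tuple


def speak_times(due_at: datetime,
                notice: Optional[timedelta] = None) -> List[Tuple[str, datetime]]:
    """Return (label, time) pairs for the speak tasks of one reminder."""
    tasks = [("event", due_at)]
    if notice is not None:
        # The notice is spoken ahead of the event, e.g. one day before.
        tasks.insert(0, ("notice", due_at - notice))
    return tasks
```

For a reminder due on 2 January at 09:00 with a one-day notice, this yields a notice task on 1 January at 09:00 followed by the event task itself.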

Each “speak” task uses the host’s default audio speakers and reads aloud what the user has written in the “Info” field of the TeNDER reminder, via the Microsoft Text-To-Speech engine. The module also keeps the local SQLite database clean by regularly detecting and removing expired or deleted reminders.
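The clean-up task might be sketched as follows, again with `sqlite3`. It assumes a hypothetical `reminders` table with `id` and `due_at` columns, and that timestamps are ISO 8601 strings in a single format and time zone, so that plain string comparison follows chronological order.

```python
import sqlite3
from typing import Iterable


def purge(conn: sqlite3.Connection, remote_ids: Iterable[str], now_iso: str) -> int:
    """Delete reminders that have expired or no longer exist in the app."""
    keep = set(remote_ids)
    rows = conn.execute("SELECT id, due_at FROM reminders").fetchall()
    stale = [
        (rid,) for rid, due_at in rows
        if due_at < now_iso or rid not in keep  # expired, or deleted remotely
    ]
    conn.executemany("DELETE FROM reminders WHERE id = ?", stale)
    conn.commit()
    return len(stale)
```

Running this on the same schedule as the synchronisation task would keep the local copy from accumulating reminders that can never be spoken.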

Mood Recognition

The mood recognition module reads the audio files (WAV) saved by the chit-chat module, which is the only module that accesses the microphone, and feeds them as input to a neural network that outputs the recognised mood.

Hence, every time that audio is deemed relevant for mood recognition and saved to the corresponding folder, this module will process it and classify the emotion as laugh, cry, or other.

How does it work?

The chit-chat module saves audio only if a set of conditions is met. Audio is dropped if:

- The audio contains only silence or background sound.

- The audio contains speech data (as opposed to non-speech sounds such as laughing or crying).

In all other cases, the chit-chat module saves the audio in a shared folder, and only then is it given as input to the neural network. This pre-processing is done both to avoid mistaken predictions and to save energy: every time a mood is recognised, the neural network is started, which increases the machine’s power consumption and affects its performance.
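The gating logic described above can be sketched as a simple filter. The sketch assumes 16-bit PCM samples, an arbitrary RMS threshold for silence, and a `contains_speech` flag supplied by some upstream speech detector; all three are assumptions, since the article does not say how silence or speech is detected.

```python
import math
from typing import Sequence


def rms(samples: Sequence[int]) -> float:
    """Root-mean-square level of a block of PCM samples."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def should_save(samples: Sequence[int], contains_speech: bool,
                silence_rms: float = 500.0) -> bool:
    """Decide whether a clip is worth passing to the mood network."""
    if rms(samples) < silence_rms:
        return False  # only silence or quiet background sound
    if contains_speech:
        return False  # speech data is dropped
    return True  # everything else is saved to the shared folder
```

Because the check is cheap compared with running a neural network, it fits the stated goal of saving energy by starting the network only for clips that pass the filter.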

Thanks to these and other functionalities, the project aims to give elderly people suffering from disease some independence, increasing their self-reliance and improving their quality of life as well as that of the people around them.

Our project consortium seeks to empower patients, helping them to monitor their health and manage their social environments, prescribed treatments, and medical appointments. The system also facilitates communication across each patient’s care pathway.

TeNDER does not replace personal connections: it provides support, giving all users more time to nourish their relationships, engage in activities that are mentally and physically beneficial, and markedly improve people’s quality of life.

[I] Source: https://www.sciencedirect.com/science/article/abs/pii/S0140366416300688.